
Putting a Price Tag on Efficiency?

We hear the phrase often – work smarter not harder. Many of us have been through exercises in efficiency – simplified or “lean” workflow, moving one part of the business closer to another, clearing out redundant systems or documents, automating various processes.

We are so conditioned that a simple buzz phrase sounds like a winner: “This will make us more efficient”.

I am generally in favor of efficiency, when not trumped by matters such as employee health or animal welfare. I just want to ensure we make an informed decision and consider a true end-to-end assessment. I’ll explain my experiences with this, which include some telling metrics on task timing in a vivarium, something you might wish to measure at your facility (see my request at the end of this blog).

Example: Laundry Day

Working from home has made it easier for me to keep up with laundry. When I am pressed for time, I can cut the process short. Shirts get folded, pants get hung, but the socks? Let’s just dump them into a drawer. I can pick out matching socks in the morning. This action might save me 20 minutes – time that is better spent at the gym, keeping up with the garden, etc. At the end of that day, I really appreciate getting more done by being efficient with the laundry. For the first few days, the extra 30 seconds or so to find a match from a full pile is pretty easy. And I don’t notice that half minute.

Several days into the cycle, I am spending a couple of minutes trying to find a good match. Soon enough I come to a realization: I do not have a good pair of clean socks today. I might have to settle for wearing gym socks out to dinner, and remind myself that when I get back home tonight, I need to do some laundry. ☹

This is what happens when we jump at the opportunity to save some time up front without considering the downstream implications. Over the course of time, I didn’t really net any time savings, I had to schedule a task without much lead time, and the quality of my dress code suffered. Was that worth the initial time I spent weeding the garden last week?

That is the analysis we need to do.

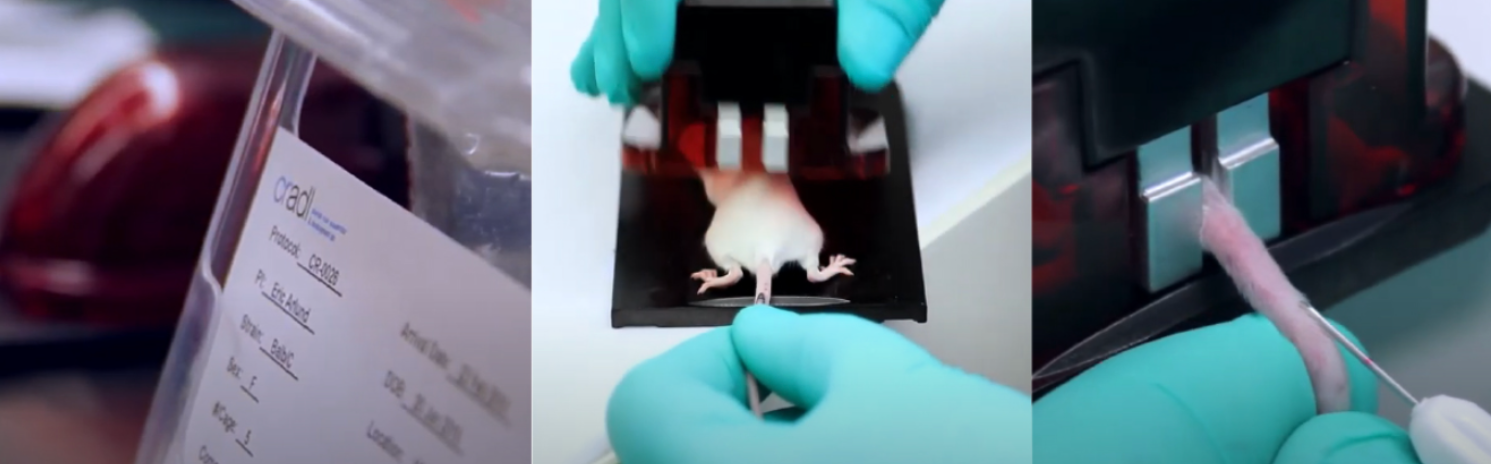

Case Study: Animal Identification

Most of us understand the need to properly identify our research animals. We can quickly grab a Sharpie marker, take each mouse out of a cage, and put 1 to 4 dots on their tail. Bingo, 5 mice identified (one of them has no mark). Super quick, and tomorrow this looks brilliant.

Those using B6 mice might have to opt for a quick ear punch. One left, one right, two left, one each, none. Bingo again, 5 mice ID’d in very little time. As long as everyone knows the code, we are good.

In these two examples, the technician is moving on to the next of many tasks on their schedule. Next door, another tech is still buzzing away putting tattoos on tails or injecting RFID microchips. Why spend a few dollars on mouse ID when quick labor can get the job done?

We don’t need to fast forward very far to see what is around the corner. Next week, someone has a cage of mice and only two mice have visible tail marks. Mice are such persistent groomers! Or, due to fighting, a few mice were isolated by the weekend staff. But mice in different cages share the same codes, so we can’t be sure who came from which cage. ☹ Well, let’s get a fresh Sharpie and re-mark them all, and try to figure out which torn ear punch is which. Our efficiency rating will suffer through this.

Has Anyone Actually Studied the Numbers?

Yes! One facility really wanted to know how much time they spent checking the ID of animals. No secrets, they suspected there were a few mistakes along the way so they wanted to look at accuracy too. They set up cages of mice, ID’d them by various methods, and recorded the time and accuracy of identifying them each week for the next six months.

On average, a cage (always 4 mice) took 36 seconds to read the tail tattoos. We don’t need to pick the mice up to read these, so naturally it is pretty easy. (The study required weighing the mice too, so each animal was handled anyway.) RFID tagged mice were close, at 38 seconds per cage. The RFID scan is quick, but you do have to pick them up for that scan.

Mice that were identified by permanent marker? 51 seconds per cage to identify. It takes a little bit of time to look at the numbers and be sure.

Ear punch codes? 67 seconds per cage. Still pretty quick, but when you have to bring the animal up close enough to see both ears, it does add a little time. This study required a unique ID for each of the 16 mice per group, so there was some complexity to the ear punch code.

Seems similar to the early days of my sock story, no? All that time to put a tattoo on the tail just to save 15 to 30 seconds per cage.

Is that significant?

If a technician has a 100-cage workload, that’s 25 to 50 extra minutes each time, at least once a week, spent just reading the ID. I think saving close to an hour a week is worthwhile. And if we handle our mice more than once per week, or if the technician has more than 100 cages to care for … we start looking at significant amounts of time.
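The arithmetic behind that estimate can be sketched in a few lines of Python. The per-cage timings are the averages reported above; the function name, the 100-cage default, and the choice of the tail tattoo as the baseline are assumptions made for illustration, not part of the original study.

```python
# Average seconds to read the IDs in one cage (4 mice), as reported above.
SECONDS_PER_CAGE = {
    "tail tattoo": 36,
    "RFID": 38,
    "permanent marker": 51,
    "ear punch": 67,
}


def extra_minutes(method, cages=100, sessions_per_week=1, baseline="tail tattoo"):
    """Extra minutes per week spent reading IDs with `method` versus `baseline`.

    `cages` and `sessions_per_week` are illustrative workload assumptions.
    """
    extra_seconds = SECONDS_PER_CAGE[method] - SECONDS_PER_CAGE[baseline]
    return extra_seconds * cages * sessions_per_week / 60


if __name__ == "__main__":
    for method in SECONDS_PER_CAGE:
        print(f"{method}: {extra_minutes(method):.1f} extra minutes per week")
```

Changing `cages` or `sessions_per_week` to match your own facility shows how quickly the difference scales.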

What About Accuracy?

In this case, management knew there was an occasional error in identifying mice. A few research protocols had uncovered this. So they analyzed the data point accuracy – 924 data points for each ID group.

The automated tattoo group had a 2.5% error rate. It was random – regardless of skill level, occasionally a person looked at 126 and reported 128, for example.

All the other visual ID methods – ear punch, ear tag, permanent marker … had error rates of 20% or greater throughout the study. Some of the groups suffered a 40% error rate when they considered that a lost ID meant the animal had to be pulled from the study. Statistical models show that when you have that little confidence in your data integrity, any conclusions are suspect. The real problem is, we don’t know which data points might be affected by misreading an ID, or even if any such mistakes happened on a particular study.
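To put those percentages in terms of individual readings, here is a rough sketch that converts the reported rates into approximate counts out of the 924 data points per group. The counts are derived here by simple multiplication and rounding; they are not figures taken from the study, and the group labels are my own shorthand.

```python
DATA_POINTS_PER_GROUP = 924  # data points per ID group, as stated above

# Error rates as reported in the text; 20% is a lower bound for the
# non-tattoo visual methods, and 40% applied only to some groups.
REPORTED_ERROR_RATES = {
    "tail tattoo": 0.025,
    "other visual methods (at least)": 0.20,
    "worst groups, counting lost IDs": 0.40,
}


def expected_errors(rate, n=DATA_POINTS_PER_GROUP):
    """Approximate number of wrong readings implied by an error rate."""
    return round(rate * n)


if __name__ == "__main__":
    for group, rate in REPORTED_ERROR_RATES.items():
        print(f"{group}: about {expected_errors(rate)} of {DATA_POINTS_PER_GROUP}")
```

Under these assumptions, a 2.5% rate is roughly 23 questionable readings per group, while 20% is roughly 185.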

This evaluation became a case for adopting a more robust method of animal ID (the tail tattoo). Researchers who value data integrity will appreciate the gain in accuracy, and welcome the time savings as simply “it is so much easier to read the tattoo.”

Unfortunately, the above data never made it to publication. Their legal department found it a little too sensitive to publish.

I tell this story not as a way to get you to change your mouse ID methods (though you are welcome to take a look at Labstamp if you want to). My goal is simply to encourage investigation into the metrics surrounding animal ID.

- How much time is spent reading the IDs of mice?

- How accurate is the team at reading each method deployed?

- Is there an incidence rate of veterinary treatments linked to any particular method? (infected ear tag anyone?)

- And if anyone is really serious, we could look at baseline pain / distress / behavior levels in mice based on their ID method.

If your facility has collected data on any of this, please reach out to me; I would love to learn your findings. If you are interested in running a Time and Accuracy study at your facility, I can help you set that up!
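For anyone who wants a starting point, here is a minimal sketch of how the first two questions (time and accuracy) might be tallied once readings are logged. The `Reading` record, its field names, and the `summarize` function are hypothetical, invented for this illustration; they do not describe how the facility above ran its study.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Reading:
    """One logged cage check (hypothetical record layout)."""
    method: str      # ID method, e.g. "ear punch"
    seconds: float   # time taken to read every ID in the cage
    ids_read: int    # number of animals read
    ids_wrong: int   # readings that did not match the true ID


def summarize(readings):
    """Return mean seconds per cage and error rate for each ID method."""
    totals = defaultdict(lambda: {"cages": 0, "seconds": 0.0, "read": 0, "wrong": 0})
    for r in readings:
        t = totals[r.method]
        t["cages"] += 1
        t["seconds"] += r.seconds
        t["read"] += r.ids_read
        t["wrong"] += r.ids_wrong
    return {
        method: {
            "mean_seconds_per_cage": t["seconds"] / t["cages"],
            "error_rate": t["wrong"] / t["read"],
        }
        for method, t in totals.items()
    }
```

Feeding it one `Reading` per cage per session, from a spreadsheet export for example, gives a per-method comparison that can be tracked week over week.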

Author: Eric Arlund, August 2021 – please contact Eric at earlund@somarkinnovations.com if you would like to discuss any of the topics raised in this article.